
Evidence Forensics - Exposing tampered recordings

By Doug Carner (Published January 2015, all rights reserved)

The defense attorney receives a copy of a police interrogation. It sounds like a solid confession, but what if the detectives paused the recording while they coached the suspect on what to say? Are exonerating statements absent from the recording, even though the defendant clearly remembers saying them?

Does the case involve surveillance video that is missing moments critical to proving innocence? Has photographic evidence been altered? Was image quality altered to hide facts harmful to the opposing counsel's case? In short, when can the attorney trust the discovery evidence to tell the whole truth?

Evidence spoliation and manipulation are serious charges that cannot be adequately answered through subjective speculation. Finding the truth requires an understanding of how evidence is manipulated, how to prove it, and the safeguards available to prevent its occurrence.

At its most basic level, audio-video-image tampering is the addition, removal or relocation of content in a previously authentic recording. This may occur through editing, quality degradation, or modifications of the file's metadata or properties. For example, a file's GPS metadata can be altered to denote a misleading address for the recorded event. With video, a gun can be inserted into the client's hands, or exonerating details removed. In most cases, these types of changes require minimal skill and access to common editing software.

Evidence manipulation likely dates back to the Stone Age, when cavemen recorded history using wall markings, which may have undergone embellishments to tell a more compelling story. I can imagine Krog running from a saber-tooth tiger, and then recording his day with a much more heroic encounter.

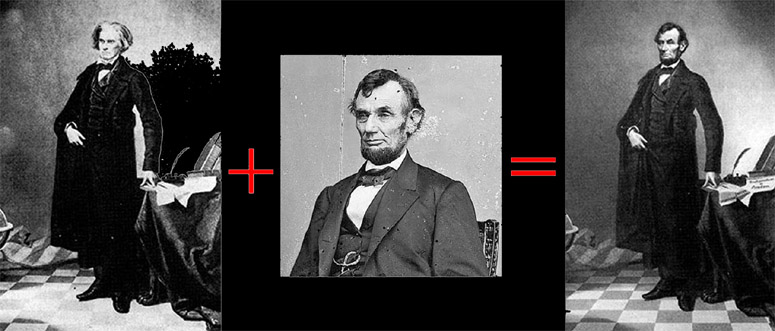

In the 1960s, schools across the country prominently displayed a photographic forgery promoted as an authentic moment from history. I clearly remember this example on permanent display in my grade school classroom. In the picture, Abraham Lincoln’s head had been inserted onto a portrait of John Calhoun (image #1) and reprinted for mass distribution(1). It was an inspirational fake that joins the ranks of so many other forged historical images(2). Due to the limited methods available in that era, this creation must have taken several days for a skilled artist to create.

Image #1: Famous composite of Abraham Lincoln's head painted onto John Calhoun's body

Today, affordable software allows the unskilled computer user to create a new reality in minutes. For example, Adobe Photoshop™ can remove a gun from a person's hand and automatically correct for background, lighting and shadows to leave a natural looking scene(3). This type of manipulation is called in-painting(4) and, before explaining how it can be detected, it is important to understand how multimedia files are saved.

When a person looks at a picture, they see the complex colors and shapes that create the overall image. The camera sees this same image as a grid of individual points, called pixels(5). Each pixel is recorded as a group of numbers that represent its color and luminosity values. The limited range of values for each pixel is determined by the capture and storage settings of the recording device. The instructions that handle the compression and decompression of the image information are known by the shorthand term, Codec.

Image file formats BMP, PNG, RAW and TIF(6) are typically lossless, meaning that they save the maximum image data possible using the limited available pixel values for their respective format. Most lossless Codecs attempt to reduce the final file size by identifying repeated data patterns, and then substituting a shorter internal token to reference each pattern. Most people have experienced this type of compression when working with ZIP files, which are also lossless.
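The lossless round trip described above can be sketched in a few lines of Python. This is only an illustration: it uses the standard `zlib` module (the same family of compression used by ZIP files) rather than any particular image Codec, and the row of pixel values is hypothetical.

```python
import zlib

def lossless_round_trip(data: bytes):
    """Compress and decompress data; report both sizes and whether the result is identical."""
    packed = zlib.compress(data, level=9)
    restored = zlib.decompress(packed)
    return len(data), len(packed), restored == data

# Hypothetical row of pixel values containing long repeated runs.
row = bytes([200] * 500 + [35] * 500)
original_size, packed_size, identical = lossless_round_trip(row)
print(original_size, packed_size, identical)
```

The compressed copy is far smaller because the repeated values are replaced by short references, yet decompression returns every original byte.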

The alternative, and far more common, Codecs are lossy, meaning that the range of available color and luminosity values is extremely limited. Any color or luminosity value outside these limited choices is substituted with its nearest available alternative. JPG is the most popular lossy image Codec and, when its compression levels are set to be too aggressive, the resulting image can appear unnatural and distorted.

Lossy compression causes destructive quantization errors(7), represented as visual artifacts or lost audio fidelity, that permanently degrade the details of the resulting recording. If the process is successively repeated, the visual or auditory data will eventually approximate a homogeneous blur. This is similar to the detail losses that occur to a fax of a fax, of a fax, ad infinitum.
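The nearest-value substitution behind a quantization error can be illustrated with a toy example. This sketch is not how JPG works internally (JPG quantizes frequency coefficients, not raw pixels); the step size of 16 and the pixel values are assumptions chosen only to make the loss visible.

```python
def quantize(values, step):
    """Replace each 0-255 value with its nearest multiple of step (halves round up)."""
    return [min(255, int(v / step + 0.5) * step) for v in values]

# Hypothetical luminosity values from six neighboring pixels.
pixels = [12, 37, 40, 41, 200, 203]
stored = quantize(pixels, 16)
errors = [abs(a - b) for a, b in zip(pixels, stored)]

print(stored)   # the values actually saved
print(errors)   # the detail that was discarded and cannot be recovered
```

Values 40 and 41 are saved as the same number, so nothing in the stored file records that they were ever different.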

Video Codecs(8) are unique in that they remove data both spatially (within each video frame) and temporally (correlations between frames), to exploit the way humans perceive motion. When a seemingly detailed video is paused, the visual deficiencies will become more noticeable.

When the viewing software reverses the Codec, intentional blurring and lighting changes may be automatically applied to produce a more visually appealing video. However, all of these compression and decompression changes significantly alter the validity and usefulness of the visual evidence.

Some of the data losses can be estimated or filtered using software specifically designed for forensic reconstruction. Even so, the resulting video will never be as accurate as what the original camera was capable of capturing. The destructive changes also increase the challenge of detecting potential content manipulation.

Similarly, audio recordings are recorded as numeric values whose accuracy is reduced by the Codec to create a more compact file size. When the audio Codec uses inexact approximations to reduce file size, fine details are discarded.

The recording defects created by the Codec also serve as a watermark, helping to identify the equipment and/or software used to create the recorded file. If those indicators are inconsistent with the equipment that recorded the event, then the file is proven to be inauthentic.

Each type of recording device imprints a unique noise profile onto the files it produces. This noise profile, called PRNU (Photo Response Non-Uniformity), is constant over the life of the equipment and remains recognizable after simple Codec compressions and restorative enhancements(9). The PRNU can be extracted from the recording and then indexed against a table of known values, or simply tested against a controlled recording from the suspected recorder. This is comparable to matching a bullet's ballistic markings to a specific gun barrel.
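The idea of testing a questioned recording against a known recorder can be sketched as a correlation test. This is a heavily simplified toy, not a forensic PRNU workflow: it uses a one-dimensional signal instead of an image, a moving average as a stand-in for a proper denoising filter, and simulated noise patterns for two hypothetical cameras.

```python
import math
import random

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)

def noise_residual(signal, radius=2):
    """Subtract a local average so that mostly the fine-grained noise remains."""
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - radius), min(len(signal), i + radius + 1)
        out.append(signal[i] - sum(signal[lo:hi]) / (hi - lo))
    return out

def simulate_recording(pattern, rng):
    """Smooth scene content plus the sensor's fixed pattern plus random noise."""
    return [100 + 50 * math.sin(i / 40) + 2.0 * p + rng.gauss(0, 1)
            for i, p in enumerate(pattern)]

rng = random.Random(7)
camera_a = [rng.gauss(0, 1) for _ in range(2000)]  # hypothetical sensor pattern A
camera_b = [rng.gauss(0, 1) for _ in range(2000)]  # hypothetical sensor pattern B

questioned = noise_residual(simulate_recording(camera_a, rng))
match_a = pearson(questioned, camera_a)
match_b = pearson(questioned, camera_b)
print(round(match_a, 2), round(match_b, 2))
```

The residual correlates strongly with the pattern of the camera that produced it and close to zero with the other camera, which is the basis of the ballistic comparison described above.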

Another emerging science is electrical network frequency (ENF) analysis(10). The concept is that each power plant generates electricity near, but not exactly at, 60Hz within North America. The exact frequency is constantly changing as a result of changing electrical demands, and those frequency values have been documented for decades. By measuring the underlying 60Hz frequency from a recording, it is possible to determine when and where a recording was created. The carrier frequency of our nation's electrical grid can serve as a silent witness to your recorded evidence.
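Measuring the underlying hum can be sketched as a search for the strongest frequency in a narrow band around 60Hz. This is a minimal illustration under stated assumptions: the signal is synthetic (a 59.97Hz hum under a louder 220Hz tone), the search range and step are arbitrary choices, and a real ENF examination would track the frequency over time and compare it against logged grid data, which this sketch does not attempt.

```python
import cmath
import math

def estimate_hum_frequency(samples, sample_rate, low=59.9, high=60.1, step=0.01):
    """Return the candidate frequency (Hz) with the strongest response in [low, high]."""
    best_freq, best_power = low, -1.0
    steps = int(round((high - low) / step))
    for k in range(steps + 1):
        freq = low + k * step
        w = -2j * math.pi * freq / sample_rate
        # Correlate the recording against a complex tone at this frequency.
        power = abs(sum(s * cmath.exp(w * n) for n, s in enumerate(samples)))
        if power > best_power:
            best_freq, best_power = freq, power
    return round(best_freq, 2)

SAMPLE_RATE = 1000  # samples per second (assumed)
samples = [
    0.2 * math.sin(2 * math.pi * 59.97 * n / SAMPLE_RATE)    # faint mains hum
    + 0.5 * math.sin(2 * math.pi * 220 * n / SAMPLE_RATE)    # louder foreground tone
    for n in range(4 * SAMPLE_RATE)
]
estimate = estimate_hum_frequency(samples, SAMPLE_RATE)
print(estimate)
```

Longer recordings allow finer frequency resolution, which is one reason short clips are harder to date this way.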

To preserve the original content as it was saved by the recorder, and validate authenticity, recordings can be stored in the manufacturer's proprietary file Codec and format. This is common with high-end cameras, some audio recorders, and most digital video recorders (DVR). These proprietary recordings are usually denoted with file name extensions unique to the equipment manufacturer and, even when editing software is licensed to read the file's contents, the ability to resave into the same proprietary format remains a closely guarded secret. Unless the proprietary file algorithm has been hacked, which is quickly known within the forensic community, the file in question is generally deemed authentic simply because it is saved in a secure proprietary format.

Most audio, image and video formats include non-visible information, commonly referred to as metadata. Metadata(11) can reveal facts about the recorder's model number, user settings, physical location, and much more. These values can be compared to known case facts. When a recording is saved in a secure proprietary format, the metadata is also secure from tampering. However, if the recording is saved in an open format and Codec, then its metadata can be manipulated and resaved back into the same format and Codec. This is why open format manipulations are the most challenging to detect.

Another round of tests involves using more basic detective skills. For example, light travels in a straight line until some substance shifts its trajectory. For an image, or a single video frame, the test is to draw a straight line that touches upon an object, and the corresponding point on its shadow. This is repeated for all points on all objects that cast a visible shadow from the same light source. All the lines must intersect at the exact same point, the light source, even if that point is not viewable in the scene. If any line does not intersect at the light source, then the object touching this line is suspect and requires further examination(12).
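The shadow test can be sketched as a small geometry routine. The coordinates below are hypothetical pixel positions, the 5-pixel tolerance is an arbitrary assumption, and taking the median of all pairwise intersections is just one simple way to estimate the common point when most of the lines are genuine.

```python
import math
from itertools import combinations
from statistics import median

def line_through(p, q):
    """Return (a, b, c) for the line a*x + b*y = c through p and q, with a^2 + b^2 = 1."""
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2
    norm = math.hypot(a, b)
    a, b = a / norm, b / norm
    return a, b, a * x1 + b * y1

def intersection(l1, l2):
    """Intersection point of two lines, or None if they are parallel."""
    (a1, b1, c1), (a2, b2, c2) = l1, l2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-9:
        return None
    return (c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det

def suspect_pairs(pairs, tolerance=5.0):
    """Estimate the light source and list the object/shadow pairs whose line misses it."""
    lines = [line_through(obj, shadow) for obj, shadow in pairs]
    hits = [pt for l1, l2 in combinations(lines, 2)
            if (pt := intersection(l1, l2)) is not None]
    light = (median(x for x, _ in hits), median(y for _, y in hits))
    flagged = [i for i, (a, b, c) in enumerate(lines)
               if abs(a * light[0] + b * light[1] - c) > tolerance]
    return light, flagged

# (object point, shadow point) in pixels; the last pair is deliberately inconsistent.
pairs = [((100, 300), (40, 350)), ((250, 320), (220, 374)),
         ((500, 310), (520, 362)), ((650, 290), (700, 338)),
         ((350, 300), (380, 360))]
light, flagged = suspect_pairs(pairs)
print(light, flagged)
```

In practice the points are marked by hand, so the tolerance has to allow for measurement error before an object is treated as suspect.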

A similar test is possible when the scene includes a mirror, glass or other flat reflective surface. If the scene includes objects and their reflections, then lines can be drawn to pass through points on each object and that same point's corresponding reflection. Those lines must converge at the imaginary spot where the camera would have been located, if the reflection angle could have been straightened to allow light to pass straight through the reflective surface. Deviations from this rule identify suspect objects worthy of a deeper inspection.

With audio, the comparable test is to listen for repeated phrases spoken with the same vocal fluctuations and pauses. This could be an indication of content copying. The expert will also listen for incomplete words and sudden changes in background noises, as these could indicate content edit points.

The next set of tests is structural. The goal is to match the characteristics of the recording to the known case facts with questions like:

Is the resolution, duration, Codec and file size of the recording consistent with the possible capture settings of the known recording device? For example, there are no known surveillance recorders whose native storage format is the video-DVD format used with common home DVD players. Yet video-DVD is a common format for discovery evidence due to its ease of playback. Video-DVDs are created through a post-recording process, which always introduces another layer of destructive quality degradation.

Does the metadata match the known case facts? For example, file metadata may include GPS coordinates or a creation date/time that deviates from the police report. This inconsistency would need to be explained.

Does the video include a title screen, subtitles, a picture-in-picture (PIP), zooming or speed changes? Except with special equipment, these items cannot originate at the time of the original video recording. Their existence can be proof of tampering and that discovery may not include "best evidence".

Does the recording suddenly drop out, spike, or skip periods of time? If the recording includes a reference time stamp or time signal, they can assist in determining if the cause is an anomaly, equipment issue or intentional act.
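When a recording carries a reference time stamp, checking for skipped periods can be sketched as a scan for irregular steps between consecutive stamps. The time stamps, the 100-millisecond frame interval and the 5-millisecond tolerance below are all hypothetical values chosen for illustration.

```python
def find_time_gaps(timestamps_ms, expected_interval_ms, tolerance_ms=5):
    """Return (earlier, later, deviation) for each step that departs from the expected interval."""
    gaps = []
    for earlier, later in zip(timestamps_ms, timestamps_ms[1:]):
        deviation = (later - earlier) - expected_interval_ms
        if abs(deviation) > tolerance_ms:
            gaps.append((earlier, later, deviation))
    return gaps

# Hypothetical frame time stamps in milliseconds, with two seconds missing after 300.
stamps = [0, 100, 200, 300, 2300, 2400, 2500]
gaps = find_time_gaps(stamps, expected_interval_ms=100)
print(gaps)
```

A negative deviation would indicate time running backwards, such as looped content. The scan only locates the irregularity; deciding whether it is an anomaly, an equipment issue or an intentional act still requires the other case facts.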

I testified in a case where the authenticity of a claimed original video in discovery was being challenged. That video included a smaller embedded PIP (Picture-in-Picture) that briefly displayed a zoomed view of the underlying recording. It was further observed that the video and PIP looped. The only possible non-tampered explanation was that, at the time of the depicted event's occurrence, someone was making that zoomed PIP and looping decisions, while having access to the necessary equipment to support this capability. The party in charge of the recording was not claiming this as the cause, and thus the existence of post-production video tampering was proven.

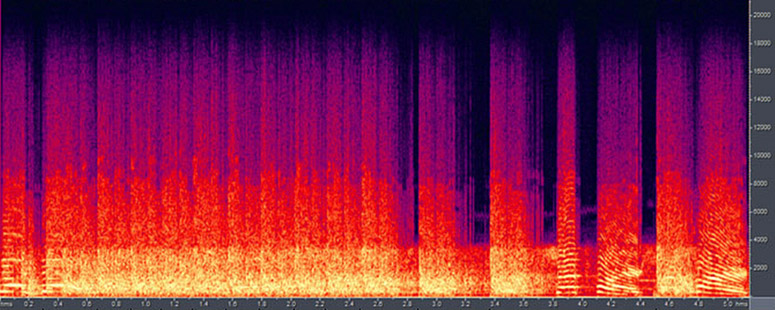

Another powerful authenticity test is to view a recording as a graph of frequencies over time, known as a Spectrogram (image #2). A forensic expert can analyze frequency patterns for indications of tampering or proof of authentication. For example, a disruption in the perfect sine wave of a seemingly imperceptible hum might indicate a butt splice edit of the recording. The Spectrogram also makes it possible to discern similar sounds or voices, and to perform identity verification. This is an oversimplification of an extremely complex topic(13).

Image #2: Spectrographic analysis identifying gun shots, echoes, and other similar sounds

Audio tampering is tougher to prove due to the reduced data depth of audio versus video. I had a case where the audio evidence included several points of interruption, represented as brief clicking sounds. The hiring attorney was concerned over the integrity of the sentences surrounding those clicks. Normal sounds are visually represented as oscillations above and below a center line, called the Bias. Analysis of the audio signal showed that each click lasted a consistent two-tenths of a second and was located solely above the Bias line in a ramp-up pattern.

The ramp-up pattern is consistent with a mechanical tape recorder being stopped and later restarted. This pattern results from the microphone and recorder requiring a brief period of time to properly balance their connected electrical levels prior to responding with an AC sound signal. The existence of Stop-Start events contradicted the testimony of the opposing witness, and the evidence was shown to be a manipulation.
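Locating this kind of one-sided event can be sketched as a search for stretches where the signal stays above the center line far longer than normal sound would. The sketch below is an assumption-laden toy: the audio is synthetic (a 440Hz tone interrupted by a 0.2 second ramp), the center line is taken to be zero, and the 0.05 second minimum duration is an arbitrary threshold.

```python
import math

def find_one_sided_runs(samples, sample_rate, min_duration=0.05):
    """Return (start_seconds, duration_seconds) for runs that stay above the center line."""
    runs, start = [], None
    for index, value in enumerate(samples):
        if value > 0:
            if start is None:
                start = index
        elif start is not None:
            if (index - start) / sample_rate >= min_duration:
                runs.append((start / sample_rate, (index - start) / sample_rate))
            start = None
    # Close a run that is still open when the recording ends.
    if start is not None and (len(samples) - start) / sample_rate >= min_duration:
        runs.append((start / sample_rate, (len(samples) - start) / sample_rate))
    return runs

SAMPLE_RATE = 8000  # samples per second (assumed)
tone = [math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(4000)]
ramp = [(n + 1) / 1600 for n in range(1600)]  # 0.2 s rising entirely above the center line
samples = tone + ramp + tone
runs = find_one_sided_runs(samples, SAMPLE_RATE)
print(runs)
```

Ordinary sound crosses the center line many times per second, so only the ramp is long enough to be reported.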

Since audio, video and image files are typically saved in a lossy format, the defects are easily detected. If someone manipulates the recording, they would need to save their altered version in a lossy format to justify the existence of the preexisting compression artifacts. However, that also means that the original file details will have undergone at least two iterations of lossy compression, while the recent tamper changes will have only undergone one, and thus be affected the most. These variances in content defects can be used to detect content tampering.

The test is to intentionally recompress image and video files one more time, so the areas with post-production changes will be disproportionately affected. By then subtracting the received file from the recompressed version of itself, the areas of potential tampering are highlighted. This process is known as Error Level Analysis (ELA)(14) for images, or VELA for video, and it is a very effective tool. Color attributes in the ELA results can identify which editing software was used. VELA testing can identify video cropping and content splicing(15).

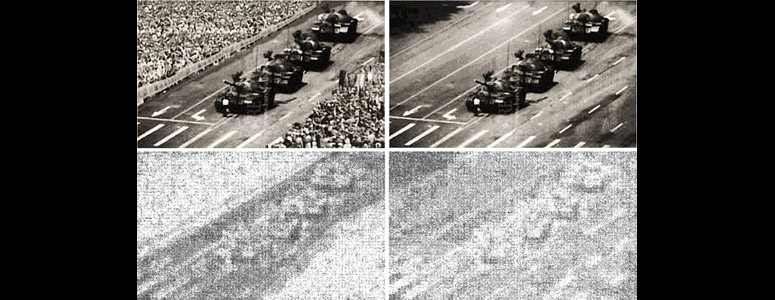

Below are two photographic versions of the 1989 Tiananmen Square protest that appeared in the international news. Below each photo (image #3) are its ELA test results, where white depicts the details lost during the latest round of compression. ELA testing identifies the left image as the fake by emphasizing the compression losses from the post-production addition of the crowd when compared to the tank and street details. On the right side is the authentic image, where no single high-contrast object stands out relative to the other objects in that same image.

Image #3: Tiananmen Square photos shown in international news and their ELA test results.(16)

Authentication testing can be performed using geometry and statistics to determine the size of people and objects, or to calculate the speed of people and vehicles. Each calculated value will include a deviation range and confidence level determined by the resolution and relative size of the objects being measured. If the final measurements differ from the established case facts, the cause will need to be explored.
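A speed calculation with its deviation range can be sketched as follows. Every number here is hypothetical (the pixel distance, the scale of 0.05 meters per pixel, the frame count and the frame rate), and the range uses simple worst-case bounds of one pixel and one frame rather than a full statistical confidence level.

```python
def speed_range(pixels, meters_per_pixel, frames, fps, pixel_error=1.0, frame_error=1.0):
    """Return (low, nominal, high) speed in meters per second from pixel and frame counts."""
    if frames <= frame_error:
        raise ValueError("too few frames to bound the elapsed time")
    nominal = (pixels * meters_per_pixel) / (frames / fps)
    # Worst cases: shortest distance over longest time, and the reverse.
    low = ((pixels - pixel_error) * meters_per_pixel) / ((frames + frame_error) / fps)
    high = ((pixels + pixel_error) * meters_per_pixel) / ((frames - frame_error) / fps)
    return low, nominal, high

# Hypothetical vehicle: travels 240 pixels across 30 frames of 15 fps video.
low, nominal, high = speed_range(pixels=240, meters_per_pixel=0.05, frames=30, fps=15)
print(round(low, 2), round(nominal, 2), round(high, 2))
```

Lower resolution or fewer frames widen the range, which is why the relative size of the measured object matters.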

Modern video Codecs segment the viewed scene into a mosaic of tiny tiles called slices. You have likely experienced slices when your cable or satellite television signal was momentarily disrupted, and colored tiles replaced what should have been video content. When video is remotely acquired from a DVR, the feed will drop slices of video when the DVR's internet connection cannot keep pace with the video content. The lost data corrupts the validity of the evidence.

If the person acquiring the recording is on-site, the proper extraction method is to copy the native files. If that is not an option, they can use the recorder's menu to export or save a native or second generation copy. Another option is to use a forensic capturing tool. If the person lacks the training to do any of this, then the recorder should be taken to someone more capable. If none of this is possible, then steps must be taken to preserve the recording until someone with proper training is available. These steps are especially important with DVRs and answering machines since their recordings can be automatically or accidentally erased over time.

Unfortunately, it is far too common for the on-site person to ignore training and exhibit a total disregard for preserving the evidence. They may simply aim a camera at the DVR playback screen or use a second audio recorder to capture an answering machine message. In both scenarios, the person is manufacturing their own version of the evidence and it will be severely defective and unresponsive to enhancement services. Differences in resolution and speed will cause evidentiary data to be lost, noise defects to be introduced, and new data to be invented. The person who uses this type of destructive acquisition method must be able to justify their decision, and explain why they were unable to preserve the original recording.

Once a file has been preserved, it can be shared as needed. Copying a file, without processing it in any software, does not alter a file or its contents. Likewise, the use of emailing, uploading, USB thumb drives, CDs, and other means for moving data will preserve file integrity, unless the data is corrupted in transit.

This article has only covered a partial list of the authentication issues and tests available. While these tests can prove a recording has been changed, no technology can recover what no longer exists. If a witness is digitally removed from a video, or the sound of a gunshot is silenced, all that can be determined is that something is missing.

Unlike what is depicted on television crime shows, science does have its limits. The best method to preserve evidentiary integrity is to have a qualified third party recover and handle the files, but that is rarely a viable option. Alternatively, the files can be hashed immediately upon their extraction.

A hash value(17) is an alphanumeric digital fingerprint generated solely from the structure, contents and internal metadata of an electronic file. Any change to the target file, regardless of its subtlety, will result in a different hash value. Hash values are consistent for each file regardless of the software used to generate them, provided the same hash algorithm is used. Two examples of free Windows hash generating software are "QuickHash" and "hashmyfiles". Both programs make it easy to hash entire folders and discs in a matter of seconds. The resulting values can then be shared to ensure that all parties are working from the same set of facts.
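The behavior of a hash value can be demonstrated with Python's standard `hashlib` module. This sketch uses SHA-256 as an assumed choice of algorithm (the article's reference discusses MD5), and the file names and contents are invented for the demonstration.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def sha256_of_file(path, chunk_size=65536):
    """Hash a file's bytes in chunks so large recordings need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

with tempfile.TemporaryDirectory() as folder:
    original = Path(folder) / "evidence.bin"
    copy = Path(folder) / "copy.bin"
    tampered = Path(folder) / "tampered.bin"

    original.write_bytes(bytes(range(256)) * 100)   # stand-in for a recording
    shutil.copyfile(original, copy)                 # plain copy, no processing

    altered = bytearray(original.read_bytes())
    altered[1000] ^= 0x01                           # flip a single bit
    tampered.write_bytes(bytes(altered))

    hash_original = sha256_of_file(original)
    hash_copy = sha256_of_file(copy)
    hash_tampered = sha256_of_file(tampered)

print(hash_original == hash_copy)      # True: copying preserves the contents
print(hash_original == hash_tampered)  # False: one changed bit alters the hash
```

Recording the hash at the moment of extraction gives every later recipient a way to confirm they hold the same file.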

Forensic experts understand the importance of hashing every electronic file in their case. They will then perform authentication testing and, if testing generates new files, those will be hashed too. The expert will review relevant case facts, expert reports and case theories because the truth is the truth, regardless of the hiring party.

If opposing counsel does not perform authentication testing on their evidence, it may indicate their lack of confidence in that evidence. If testing was performed, your expert can verify that industry best practices were followed. Justice demands the truth, and forensic science can provide the answers.

This is not to say that file modifications are never warranted, but they must be documented and justified. For example, blurring faces or redaction may be required to comply with privacy rulings(18). Furthermore, while forensic enhancements are designed to improve the quality of details, they too are a type of file modification.

All of the testing available represents an extensive scientific toolbox, with new tools in development. Determining the right tools depends upon the specifics of the case and the type of recordings to be tested. A qualified expert will rely upon tools and methods established in generally accepted science and industry best practices. Their results can deliver powerful case support, and a devastating rebuttal to both the narrative and expert reports of opposing counsel.

References:

1. Composite of Abraham Lincoln's head painted onto John Calhoun's body. LINK

2. Specialized software verifies historic forgeries. LINK

3. Removing objects and people with automatic scene healing. LINK

4. Reconstructing lost or deteriorated parts of images and videos. LINK

Inpainting, a tool for visual manipulation. LINK

5. North Carolina State Historic Preservation Office explaining the relationship between pixels and images. LINK

6. Image types and formats. LINK

7. DCT quantization matrices visually optimized for individual images. LINK

8. Video Codecs and decompressors. LINK

9. "A Study of the Robustness of PRNU-based Camera Identification" by Kurt Rosenfeld, Taha Sencar, and Nasir Memon. LINK

10. Dartmouth paper on using Electric Network Frequency to authenticate a recording. LINK

11. Programs like Mediainfo display the hidden metadata stored within a media file. LINK

12. Exposing photo manipulation with inconsistent shadows. LINK

13. "What are spectrograms?" by Tim Carmell, 1997. LINK

14. Tutorials and a test-it-yourself proof of concept tool. LINK

15. Concept introduced by Dr. Neal Krawetz at the 2007 Black Hat conference. LINK

16. Fake photos can alter real memories. LINK

17. MD5 checksum. LINK

18. "Legalities of street photography" by Dan Roe. LINK

