MAT - Multimedia Authentication Testing

A young man is on trial, and the primary exhibit is a video that the defendant claims to be a forgery. The attorney has a duty to investigate this claim, and the courts will exclude the evidence should this claim be true. So what happens next?

Modern software automates the process of adding or removing a suspect or their weapon from an image, with the end results passing the most rigorous visual examination. The remedy comes from ever-evolving testing software and methods based on scientific research and peer review. This is a cat-and-mouse challenge and, while an absolute "Gotcha" conclusion is unlikely, an analyst can perform a suite of tests that collectively lead to a substantiated summary opinion regarding authenticity.

This is a real-world problem, and I have processed cases with edited phone conversations, modified accident photos, and altered dashcam footage. In one case, I was able to prove that a mobile phone video depicting an altercation had been edited to present the perpetrator as the victim.

Audio Video Forensic Analysts are the Trier-of-Fact, and their primary duty is to the facts. Several groups, educational settings and web resources provide the required training and scientific understanding behind multimedia authentication testing(1). Free on-line collaboration forums(2) encourage forensic experts, newbies, scientists and scholars to share knowledge and refine their skills. This article provides a brief how-to look into the exciting world of multimedia authentication.

As with all forensic work, it is important to follow industry best practices. This means that the questioned files should be captured at their source whenever possible, that all work should occur in a manner that does not alter the questioned files, and that analysis should only be performed on exact file copies. If the file is of a questionable or unknown origin, as is usually the case, then best practices should still be followed from that point forward.

The analyst should only rely upon, and denote, facts that they can personally attest to, and generally that will be limited to data extracted from the questioned file, and the manufacturer’s documentation regarding the equipment known to have created the questioned file.

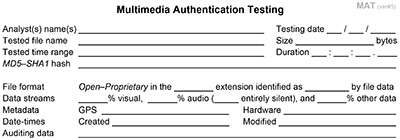

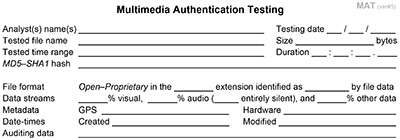

There are hundreds of available authentication tests, and the Forensic Working Group has consolidated the most effective ones into a simple Multimedia Authentication Testing (MAT) worksheet form(3). Image and audio tests can be performed using free, or nearly free, software(4).

However, video authentication software can be priced beyond most budgets. Fortunately, there is an effective workaround.

Modern video codecs integrate visual contents by periodically storing complete reference “i” frames, between a series of subordinate predictive “p” vector frames and bi-directional “b” frames. It is these “i” frames that contain the truest scene details. When video authentication software is unavailable for a specific test, that test can often be performed using image authentication software upon the uncompressed extracted(5) video “i” frames.

The MAT form guides the Trier-of-Fact through each test in a logical workflow, with test results being expressed in simple data or boolean terms. For each file being tested, the MAT form serves as the analyst’s working notes, and each completed MAT form should be included as an exhibit to the analyst’s forensic report. Correlations drawn between multiple MAT forms should be expanded upon within this same report.

The first page of the MAT form is user-fillable using a web browser, with the following two pages providing how-to instructions that are supplemented by this article. To create a logical workflow, the MAT form is segmented into four sections: general, image-video, audio and summary.

GENERAL

The general section has a place to list the name(s) of each analyst performing the testing, and the date of testing. If the testing spans multiple days, it is customary to denote the initial testing date, and then denote additional dates in the “Additional tests, notes and observations” line of the summary section of the MAT form. The default date format is Month / Day / Year.

Next, the analyst lists the name of the file being tested, its file size in bytes (not the file’s disk allocation), its playing time duration to the thousandths of a second (leave this field blank for images), its hash (denoting either MD5 or SHA1), its listed file extension, and whether the file is in an open or proprietary format.

The file hash serves as a unique digital fingerprint that can be compared to the hash of any file copies, to ensure that all parties are working on the exact same file data. MD5 remains the industry standardized hash, but is gradually being superseded by the more secure SHA1 hash algorithm.
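As an illustration of the size and hash fields, here is a minimal Python sketch using only the standard library. The function name `fingerprint` and the returned dictionary keys are hypothetical choices for this example and are not part of the MAT form.

```python
import hashlib
import os


def fingerprint(path, chunk_size=1 << 20):
    """Return the byte size plus MD5 and SHA-1 digests of a file."""
    md5 = hashlib.md5()
    sha1 = hashlib.sha1()
    # Open read-only and read in chunks so large videos need little memory
    # and the questioned file is never written to.
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            md5.update(chunk)
            sha1.update(chunk)
    return {
        "size_bytes": os.path.getsize(path),  # actual size, not disk allocation
        "md5": md5.hexdigest(),
        "sha1": sha1.hexdigest(),
    }
```

Running the same function on every working copy and comparing the digests is one way to confirm that all parties hold identical file data.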

Proprietary multimedia files often require special playing software from the recorder’s manufacturer, and that software might include an option to authenticate the file in question. Most people assume that such a proprietary file must be authentic and does not require further testing. However, some proprietary formats are no longer secure, and the file format can mask post-production manipulations. Whenever possible, the underlying video and/or audio media streams should be extracted so that MAT testing can continue.

The next MAT step is to dig into the file’s metadata and document the expected file extension (which may differ from the actual extension), the allocation percentage of each stream (visual, audio and other), whether the audio stream is silent, any file GPS coordinates, audit data regarding when the file was created and modified, the hardware used to create the file, and any other determinable chain-of-custody or origin details.
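One way to arrive at an expected file extension is to inspect the file's leading signature bytes. The sketch below recognises only a handful of well-known signatures; the function names and the small alias table are assumptions made for this example, and a dedicated metadata tool reports far more detail.

```python
def expected_extension(header):
    """Guess a file type from its leading bytes; None when unrecognised."""
    if header.startswith(b"\xff\xd8\xff"):
        return "jpg"
    if header.startswith(b"\x89PNG\r\n\x1a\n"):
        return "png"
    if header[:4] == b"RIFF" and header[8:12] == b"WAVE":
        return "wav"
    if header[:4] == b"RIFF" and header[8:12] == b"AVI ":
        return "avi"
    if header[4:8] == b"ftyp":
        return "mp4"  # ISO base media family; other extensions share this box
    if header.startswith(b"ID3"):
        return "mp3"  # MP3 carrying an ID3v2 tag
    return None


def extension_matches(filename, header):
    """True when the listed extension agrees with the detected signature."""
    listed = filename.rsplit(".", 1)[-1].lower() if "." in filename else ""
    aliases = {"jpeg": "jpg"}  # illustrative alias table, not exhaustive
    expected = expected_extension(header)
    return expected is not None and aliases.get(listed, listed) == expected
```

A mismatch is not proof of manipulation, but it is the kind of discrepancy worth recording on the form.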

If the stream percentages do not add up to 100% (or 99% in the case of rounding) then the file may have an undetected stream (e.g. timing, subtitle, notes), hidden embedded data (steganography), or a corrupted end of file indicator. If the GPS coordinates, or date-time data, exist and do not match the expected case facts then further investigation may be warranted.
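The percentage check can be sketched as follows, assuming the analyst already has each stream's size in bytes from a metadata tool. The function name and the idea of flagging any total below 99% are illustrative choices that follow the rounding allowance described above.

```python
def stream_allocation(stream_bytes, file_size_bytes):
    """Return per-stream whole percentages, their total, and a review flag."""
    shares = {
        name: round(100.0 * size / file_size_bytes)
        for name, size in stream_bytes.items()
    }
    total = sum(shares.values())
    # A total below 99% leaves data unaccounted for: a possible unlisted
    # stream, embedded data, or a damaged end-of-file indicator.
    needs_review = total < 99
    return shares, total, needs_review
```

For example, a 10,000,000 byte file with an 8,000,000 byte video stream and a 500,000 byte audio stream totals 85%, so the remaining 15% would warrant a closer look.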

It is common for the file’s metadata to list several different date-time data sets that may refer to when the file recording started, when that recording finished, when it was written from memory, and select follow-up actions performed upon that file. Each date-time value might be expressed in local time, universal time (UTC), or both. This embedded date-time data is not to be confused with file related date-time data that may exist within the analyst’s computer operating system.

Nearly all real-world multimedia files are saved with destructive compression using a lossy codec (e.g. JPG, MP3, MP4), which introduces irreversible artifact defects into the file data. Each iteration of lossy compression introduces an additional set of defects, and modifies any preexisting defects. Those defects can interfere with some forensic tests, while creating new data to assist other forensic tests.

If the file in question has a silent audio stream, this could indicate that the audio stream is a structural placeholder created when a more native video-only file was transcoded into its current format. While being a later generation of a native recording is not proof of content manipulation, it is evidence that the tested file is not an exact copy of the originating recording.

If a video’s audio stream is silent except for electrical noise, then that noise can be further examined in determining the correlated video’s trustworthiness. This is an example of the Locard exchange principle which states that, whenever two objects come in contact, a transfer occurs. In our example, the destructive defects caused by transcoding created new audio details useful in evaluating the video’s trustworthiness. Several of the following forensic tests benefit from similar data relationships.

IMAGE - VIDEO

The next section of the MAT form only applies to image or video files. Here, the analyst denotes the total number of video frames, whether those frames are interlaced or progressive, the visual color space, stream resolution, stream data rate, stream codec, and the stream’s writing library. This information can then be compared to the known capabilities of the recording device. Additional metadata is then extracted to denote the file’s framerate, whether the playback speed deviates from a natural flow, if duplicate frames are detectable, and whether any visual time gaps exist.

Video recorders save each moment only once, and often do so at a variable framerate. However, when a video is created using a screen capture tool (usually at a high framerate to avoid missing unique moments) or transcoded to a specific framerate (e.g. 29.97 frames-per-second for DVD compatibility), the resulting video is likely to contain duplicate copies of each unique moment, and the existence of these duplicate frames is compelling proof that the video being tested is a later generation. Some of these duplicate frames may blend two sequential moments in time, thus creating false evidence frames.
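A rough sketch of duplicate-frame detection follows, assuming the frames have already been decoded into raw byte strings by some other tool. It only finds bit-identical consecutive frames; duplicates that were re-encoded with a lossy codec differ slightly and would need a similarity threshold instead. The function name is hypothetical.

```python
import hashlib


def duplicate_frame_runs(frames):
    """Return (start_index, run_length) for runs of identical frames."""
    digests = [hashlib.sha1(frame).digest() for frame in frames]
    runs = []
    start = 0
    for index in range(1, len(digests) + 1):
        # Close the current run at the end of the list or on a change.
        if index == len(digests) or digests[index] != digests[start]:
            if index - start > 1:
                runs.append((start, index - start))
            start = index
    return runs
```

A regular cadence in the returned runs (for example, every fifth frame repeated) points toward a framerate conversion, while isolated runs may simply reflect a static scene.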

If the examined video’s playback speed appears unnatural, then the cause should be investigated. For example, the viewed scene can appear unnaturally jerky when a native variable speed video has been resaved into a fixed framerate, thus forcing the playback speed to deviate from how the events were recorded.

Time gaps can also be caused by motion-activated systems that begin recording at a predefined time (pre-record) prior to a scene change that exceeds some threshold, and then continue for a predefined time (post-record) beyond when the detected motion ceases. Another cause of time gaps is older recorders that must periodically offload video clips from memory to their hard drive before that memory is able to retain new video events.
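Where per-frame presentation timestamps are available, time gaps can be located with a few lines of Python. This is a minimal sketch: the function name and the rule of flagging any interval longer than three times the median interval are assumptions chosen for illustration, not a standard.

```python
from statistics import median


def find_time_gaps(timestamps, factor=3.0):
    """Return (frame_index, gap_seconds) for unusually long frame intervals."""
    intervals = [
        later - earlier
        for earlier, later in zip(timestamps, timestamps[1:])
    ]
    if not intervals:
        return []
    typical = median(intervals)
    # The frame index reported is the first frame after the gap.
    return [
        (i + 1, gap)
        for i, gap in enumerate(intervals)
        if gap > factor * typical
    ]
```

Each flagged gap still needs interpretation, since motion-activated recording and memory offloading produce gaps without any manipulation.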

The next MAT section lists numerous Pass/Fail tests, and not all of these tests apply to every file type. These tests help guide the analyst as to what issues require further examination. Such test results are typically presented by easy to understand visuals.

PRNU(6): Photo Response Non-Uniformity is best explained by the fact that camera image sensors are made from silicon, a material with microscopic impurities. The pattern created by these impurities forms a subtle, unique digital fingerprint that gets embedded into every image created by that camera. This pattern is robust and can survive cropping, resizing, and lossy compression.

If PRNU extraction is possible, the analyst can determine if the PRNU matches between the tested file and a control recording (or simply other test recordings claimed to originate from the same exact device).

Data cloning(7): This is a test for intraframe manipulation, where a group of pixels is replicated elsewhere in the same image or video frame, which marks a likely point of content manipulation.
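The idea behind clone detection can be shown with a deliberately simple sketch that looks for exactly repeated pixel blocks in a grayscale image held as a list of rows. This is not the PatchMatch-based approach from the cited literature, which tolerates recompression, scaling and rotation; exact matching only works on uncompressed data. The function name and block size are assumptions for this example.

```python
from collections import defaultdict


def find_cloned_blocks(pixels, block=4):
    """Return groups of top-left (row, col) positions with identical blocks."""
    height, width = len(pixels), len(pixels[0])
    seen = defaultdict(list)
    for row in range(height - block + 1):
        for col in range(width - block + 1):
            key = tuple(
                tuple(pixels[row + r][col:col + block]) for r in range(block)
            )
            # Skip flat regions (sky, walls), which repeat naturally.
            if len({value for line in key for value in line}) > 1:
                seen[key].append((row, col))
    return [positions for positions in seen.values() if len(positions) > 1]
```

On real footage the matching step would need to tolerate small pixel differences, which is where the research methods in the references come in.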

PCA editing: Principal Component Analysis isolates an image or video into planes defined by their energy levels (essentially contrast differentials), where edge details of manipulations may appear in a different plane from their associated content.

DCT editing(8): Discrete Cosine Transform isolates the high AC energy component, and can detect double quantization artifacts resulting from an image or video that has undergone multiple lossy compressions, and can differentiate more recently changed content that was only compressed once.

JPEG editing (image only): Images are usually saved in the JPEG Codec, where post-production manipulations and subsequent JPEG resaving can leave identifiable trace data.

HSV-Lab histogram(9): Visual contents are usually saved in a YUV or RGB color space. Conversion into the HSV or Lab color space can be an effective method of detecting manipulations.

Luminance gradient(10): In nearly all images and videos, illumination primarily originates from a single light source, where deviations in the expected illumination/shadow patterns are clearly marked to help identify post-production manipulations.

Chromatic aberrations: Visual details in a planar color space are represented by pixel pairs with shared data. Post-production cropping can break those pairs, and some post-production manipulations cause color bleeding, both of which are amplified by this test.

Double compression(11): When an image or video has been resaved, artifacts resulting from the first compression can survive as a noise subset, and its existence can prove that the analyzed data is not a native recording.

ELA-VELA(12): Lossy compression reduces high frequency details and, with each additional iteration of lossy compression, fewer high frequency changes will occur. Error Level Analysis, and its Video counterpart, work by applying lossy compression, and then subtracting that compressed version from the tested file. Since post-production manipulations would have undergone fewer total iterations of lossy compression than the originating details, those changes can be displayed as high contrast pixels. The color patterns found within the test results can suggest which software produced the manipulation but, as of early 2021, that library has not matured enough to serve as reliable evidence.

Thumbnail(13) (image only): Depending upon the format, images are expected to have an embedded thumbnail, and that thumbnail is expected to visually represent the full image.

Noise(14): With rare exception, images and videos are compressed in a lossy manner, where file size reductions occur at the cost of introducing visual defects. Noise profile deviations may indicate edited contents originating from a different source.

PDF files can be imported as an image to examine deviations to the expected noise pattern.

Huffman table(15): Lossy decompression is performed using a mathematical table of values embedded in the resulting file, and the detected data table should match the table used by the expected recording/encoding device.
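For JPEG files, the embedded tables can be pulled out by walking the marker segments that precede the scan data. The sketch below collects the raw Define Huffman Table (marker 0xFFC4) and Define Quantization Table (marker 0xFFDB) payloads so they can be compared against a control file from the claimed device. It is a simplified walker that assumes well-formed, length-prefixed segments before the first scan; the function names are hypothetical.

```python
import struct


def jpeg_tables(data):
    """Collect raw DQT and DHT payloads found before the first scan."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG stream (missing SOI marker)")
    tables = {"DQT": [], "DHT": []}
    offset = 2
    while offset + 4 <= len(data):
        if data[offset] != 0xFF:
            raise ValueError("expected a marker at offset %d" % offset)
        marker = data[offset + 1]
        if marker in (0xDA, 0xD9):  # start of scan or end of image
            break
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", data[offset + 2:offset + 4])
        payload = data[offset + 4:offset + 2 + length]
        if marker == 0xDB:
            tables["DQT"].append(payload)
        elif marker == 0xC4:
            tables["DHT"].append(payload)
        offset += 2 + length
    return tables


def same_huffman_tables(questioned, reference):
    """True when both JPEG byte strings carry identical Huffman tables."""
    return jpeg_tables(questioned)["DHT"] == jpeg_tables(reference)["DHT"]
```

Matching tables do not prove authenticity, since many encoders share the standard tables, but a mismatch against a known control file from the same device is a meaningful finding.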

PseudoColor(16): Subtle pixel color differences are randomized into extreme color shifts (luminosity and temperature) to detect clipping or masked/cloned content.

Threshold tests: Common threshold tests include temporal contrast, temporal diffusion, spatial predictability, temporal noise diversion, temporal histogram correlation, and non‐monotonous motion. These tests are highly effective on videos that have undergone numerous rounds of re-compression and resizing (e.g. social media), and they are best suited to identify moments worthy of further investigation.

AUDIO

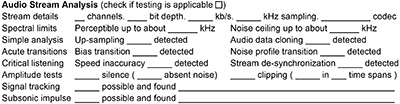

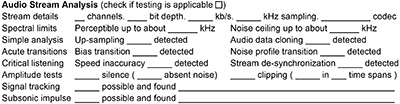

This MAT section relates to audio files, or the audio streams from a video file. The analyst first defines the number of audio channels, the data bit depth, the data rate, the data sampling rate, and the stream’s codec. Then the analyst denotes the upper sound frequency, and the upper noise frequency.

Be aware that non-forensic audio tools can alter this data during their import process.

If the upper sound frequency is about 4 kHz, but the file is saved at a sample rate well above 8 kHz, then one can deduce that a more native low-quality recording was resaved at a higher sampling rate (a sample rate can only represent frequencies up to half its value). While this does not prove file tampering, it does raise chain-of-custody questions.
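The reasoning above rests on the fact that a sample rate can only carry frequencies up to half its value. A minimal sketch of the comparison follows; the function name and the 60% margin are arbitrary assumptions for illustration, and microphone or codec limits can produce the same pattern.

```python
def resample_suspected(upper_sound_hz, sample_rate_hz, margin=0.6):
    """True when content occupies well under the available bandwidth."""
    nyquist_hz = sample_rate_hz / 2.0  # highest representable frequency
    return upper_sound_hz < margin * nyquist_hz
```

With a 4 kHz upper sound frequency, an 8 kHz file uses its full bandwidth and is not flagged, while a 44.1 kHz file is flagged because its content stops far short of the 22.05 kHz limit.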

A similar conclusion may be applicable if the noise ceiling is far below half the sample rate. Of course, microphone and codec limitations should be considered when making such an assertion. If there is a change to the speech or noise frequency profile somewhere within the recording, this could be evidence of content insertion. Similarly, sudden bias (the DC voltage component) changes could indicate content insertion. The MAT form provides a place to denote the existence of an acute bias transition and/or an acute change to the noise profile.
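An acute bias transition can be located by comparing the average sample value of adjacent windows. The following sketch assumes samples normalised to the range -1.0 to 1.0; the function name, window length and threshold are hypothetical values that would need tuning for a given recording.

```python
def bias_transitions(samples, window=1000, threshold=0.01):
    """Return sample offsets where the mean (DC) level jumps abruptly."""
    means = []
    for start in range(0, len(samples) - window + 1, window):
        block = samples[start:start + window]
        means.append(sum(block) / window)
    # Report the offset at which the later window of each pair begins.
    return [
        (i + 1) * window
        for i, (before, after) in enumerate(zip(means, means[1:]))
        if abs(after - before) > threshold
    ]
```

Gradual bias drift stays below the threshold, so only abrupt steps of the kind an insertion might leave are reported.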

Another detection tool is the analyst's own hearing, called critical listening, where one listens for unnatural audio playback speed, a de-synchronization between audio channels, or a de-synchronization between the audio and video channels. The MAT form has a place for these findings along with whether the audio stream contains silence, and whether that silence is devoid of any expected compression noise.

Next, list whether the audio has amplitude clipping, and the number of time spans in which this clipping is found. Auditory clipping or silence is not necessarily an indicator of manipulation, but its existence is expected to be consistent with the expected amplitudes of the events occurring at that portion of the recording.

If clipping occurs more than 99 times within a tested file, it is generally listed as ">99".
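Counting clipped time spans is straightforward once the samples are available. In this sketch the samples are assumed to be normalised so that full scale is 1.0, and a span is assumed to be at least three consecutive full-scale samples; both the function names and that minimum run length are illustrative choices.

```python
def count_clipped_spans(samples, full_scale=1.0, min_run=3):
    """Count runs of consecutive samples at or beyond full scale."""
    spans = 0
    run = 0
    for value in samples:
        if abs(value) >= full_scale:
            run += 1
        else:
            if run >= min_run:
                spans += 1
            run = 0
    if run >= min_run:  # a run that reaches the end of the recording
        spans += 1
    return spans


def format_span_count(spans):
    """Format the count the way the form expects, capping at '>99'."""
    return ">99" if spans > 99 else str(spans)
```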

Audio recordings may include a parasite signal (e.g. rhythmic mechanical sound, 60Hz electrical signal, etc…), even if such a sound is imperceptibly quiet. By isolating and amplifying such a signal, breaks in that signal's consistency can identify content manipulation.
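One way to follow a parasite tone through a recording is to measure its power window by window with the Goertzel algorithm, which evaluates a single frequency cheaply. The sketch below flags windows where the hum power collapses relative to the median window. It only tracks level, not phase, so a splice that preserves hum amplitude would go unnoticed; the function names, window length and ratio are assumptions for this example.

```python
import math


def goertzel_power(samples, sample_rate, target_hz):
    """Power at target_hz for one block, via the Goertzel recurrence."""
    coeff = 2.0 * math.cos(2.0 * math.pi * target_hz / sample_rate)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def hum_dropouts(samples, sample_rate, hum_hz=60.0, window_s=0.5, ratio=0.1):
    """Return start times (seconds) of windows with unusually weak hum."""
    size = int(sample_rate * window_s)
    powers = [
        goertzel_power(samples[i:i + size], sample_rate, hum_hz)
        for i in range(0, len(samples) - size + 1, size)
    ]
    if not powers:
        return []
    typical = sorted(powers)[len(powers) // 2]  # median-like reference
    return [i * window_s for i, p in enumerate(powers) if p < ratio * typical]
```

The same approach applies to a 50Hz hum by changing `hum_hz`.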

Audio recordings are not expected to include sounds that exceed the range of the recorder’s microphone. Frequencies above the microphone's limit may indicate harmless compression artifacts. However, a pulse in the subsonic range (below 20 Hz) can indicate a pause-resume event resulting from the microphone re-energizing.

The MAT form includes a place to list the results from tracking and subsonic testing.

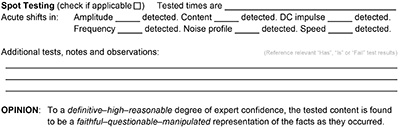

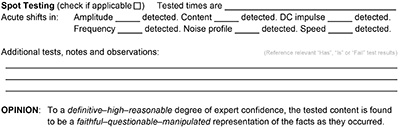

SUMMARY

The previous MAT sections are likely to identify moments worthy of greater inspection. The MAT form has a place to denote these moments along with the results from their refined testing for acute changes in amplitude, content, bias, frequency, noise, and speed.

The next MAT section is for listing additional tests, notes and observations (e.g. variable frame rate, GOP allocation, 50Hz/60Hz, harmonics, Bayer pattern, lens dimples, bias drift, special spot testing results, etc…). This is also where previous concerning MAT findings are expanded upon, where correlations between MAT findings are detailed, and where any supportive notes (e.g. chain‐of‐custody), other observations (e.g. damaged media), and any correlations to external data (e.g. case facts, other files, testimony) are detailed.

The last MAT step is where the analyst states their opinion (“faithful”, “questionable” or “manipulated”) and confidence (“definitive”, “high” or “reasonable”) regarding the trustworthiness of the tested file. A confidence degree of “reasonable” means more likely than not, “high” means highly compelling, and “definitive” means without question. A finding of Faithful means "True to the original", Questionable means "Baseless or unsubstantiated" and Manipulated means "False or misleading".

The analyst should use “reasonable” and “questionable” as their opinion’s starting point, and then weigh their test results to form a more comprehensive summary opinion. It is possible for the MAT form to come to one degree of confidence, and then be part of a report that lists a higher degree of confidence after integrating MAT results from multiple files and additional facts beyond the scope of the MAT form.

Proving that a multimedia file is altered evidence, or that a file is trustworthy, has the power to identify the guilty and free the innocent. However, a faulty opinion can cause significant harm. If you don’t know what you are doing, don’t guess. Seek out guidance or get a second opinion.

References:

1. Two great examples are https://eforensicsmag.com/course/digital-video-forensics/ and https://eforensicsmag.com/course/dvm-anti-forensics-counter-anti-forensics-w34/

2. https://www.linkedin.com/groups/2461639/

3. The MAT form is a public domain collaboration of the Forensic Working Group https://forensicworkinggroup.com/

4. Operating system’s properties tab, Mediainfo (https://mediaarea.net/en/MediaInfo), HashMyfiles (https://nirsoft.net/utils/hash_my_files.html), HxD (https://mh-nexus.de/en/hxd), VideoCleaner (https://videoCleaner.com), PhotoDetective (http://metainventions.com/photodetective.html), Audacity (https://audacityteam.org)

5. A feature of both VideoCleaner (https://videoCleaner.com) and Amped FIVE (https://ampedsoftware.com/five)

6. L. Gaborini, L. Bondi, P. Bestagini and S. Tubaro, “Multi-clue image tampering localization”, 2014 IEEE International Workshop on Information Forensics and Security (WIFS). IEEE, 2014.

J. Lukas, J. Fridrich and M. Goljan, "Detecting digital image forgeries using sensor pattern noise", eProceedings of SPIE Electronic Imaging, Security, Steganography and Watermarking of Multimedia Contents, pp. 0Y11-0Y11, 2006.

M. Goljan, J. Fridrich, T. Filler, Large scale test of sensor fingerprint camera identification, in: IS&T/SPIE Electronic Imaging, International Society for Optics and Photonics, 2009, pp. 72540I-72540I.

7. C. Barnes, E. Shechtman, A. Finkelstein, and D. B Goldman. PatchMatch: A Randomized Correspondence Algorithm for Structural Image Editing. ACM Transactions on Graphics (Proc. SIGGRAPH) 28(3), Aug. 2009.

D. Cozzolino, D. Gragnaniello, and L. Verdoliva, A novel framework for image forgery localization, arXiv preprint arXiv:1311.6932, presented at IEEE International Workshop on Information Forensics and Security, Nov. 2013.

8. S.Murali, Govindraj B. Chittapur, Prabhakara H.S, & Basavaraj S. Anami, International Journal on Computational Sciences & Applications (IJCSA) vol. 2, no.6, 2012 "Comparison And Analysis of Photo Image Forgery Detection Techniques" pp. 45-46 https://arxiv.org/ftp/arxiv/papers/1302/1302.3119.pdf

W. Wang, J. Dong, and T. Tan, Digital Watermarking: 9th International Workshop, IWDW 2010 Seoul, Korea Section 3: "Tampered Region Localization of Digital Color Images" pp. 125-127

Z. Lin, J. He, X. Tang, Chi K. Tang "Fast, automatic and fine-grained tampered JPEG image detection via DCT coefficient analysis", Journal Pattern Recognition, Vol. 42, pp. 2492-2501, 2009.

9. Reference: Anil. K. Jain, Fundamentals of Digital Image Processing, Prentice Hall, p.60-71, 1989.

10. N. Krawetz, "A picture's worth: Digital image analysis and forensics", www.hackerfactor.com, 2007.

R. Gonzalez and R. Woods, "Digital Image Processing (3rd ed.)", Prentice Hall, pp. 165–168, 2008.

11. T.Bianchi, A.Piva, "Image Forgery Localization via Block-Grained Analysis of JPEG Artifacts", IEEE Transactions on Information Forensics & Security, vol. 7, no. 3, June 2012, pp. 1003 - 1017.

T. Bianchi, A. De Rosa, and A. Piva, “Improved DCT coefficient analysis for forgery localization in JPEG images,” in Proceedings of IEEE International Conference on Acoustic, Speech and Signal Processing, 2011, pp. 2444–2447.

12. Hitesh C Patel and Mohit M Patel, IJCA (0975-8887) Volume 111, No. 15, 2015 "An Improvement of Forgery Video Detection Technique using Error Level Analysis" pp. 26-28 http://research.ijcaonline.org/volume111/number15/pxc3901508.pdf

H. Farid, "Exposing Digital Forgeries from JPEG Ghosts", IEEE Transactions on Information Forensics and Security, vol. 4, pp. 154-160, 2009.

N. Krawetz, "A picture's worth: Digital image analysis and forensics", www.hackerfactor.com, 2007.

W. Wang, J. Dong and T. Tan, "Tampered Region Localization of Digital Color Images Based on JPEG Compression Noise", Proceedings of the 9th International Conference on Digital watermarking, pp. 120- 133, 2010.

Public domain works by: kaʁstn, George Chernilevsky, Dr. Neal Krawetz, and Doug Carner

13. CCITT Recommendation T.81, ISO/IEC 10918-1:1994, "Information technology - Digital compression and coding of continuous-tone still images: Requirements and guidelines ", 1992.

E. Kee, M.K. Johnson and H. Farid, "Digital image authentication from JPEG headers", IEEE Transactions on Information Forensics and Security, vol. 6, pp. 1066-1075, 2011.

14. X. Pan, X. Zhang and S. Lyu, "Exposing Image Splicing with Inconsistent Local Noise Variances ", IEEE International Conference on Computational Photography, pp. 1-10, 2012.

15. E. Kee, M.K. Johnson and H. Farid, "Digital Image Authentication from JPEG Headers", IEEE Transactions on Information Forensics and Security, vol. 6, pp. 1066-1075, 2011.

16. Advances in Visual Computing: 7th International Symposium ISVC 2011 Las Vegas, NV "A Non-intrusive Method for Copy-Move Forgery Detection" pp. 522-523 -and- Public domain works by: Dschwen, and Aimcotest.

Article originally published February, 2021
